Mon 31 December 2018

It's the last day of 2018, and a few hours before midnight strikes. Looking back, I didn't get to do as much writing on here for the year as I wanted to (something I'm trying to change in 2019 and beyond). I did, however, manage to find time to do a lot of reading this year; mostly due to long flights, but also from a real effort on my part to get back into the habit.

It seemed fitting, then, to close the year out by listing the books I found insightful and worth grabbing a copy of. I'm opting not to link to Amazon for these, but if you prefer to buy books through there, you should be able to find any of them with a quick search. These are also lightly divided by topic, primarily for some structure.

Privacy

I've generally been a private person, but 2018 led me to look for a better understanding of what privacy really means, both on a consumer and product development level. It's not a topic that can be approached with technology alone; the books below helped me to have an even better understanding of the history of privacy (insofar as US law goes, although much of it is applicable in general).

I found this book while stumbling through the Elliot Bay Book Company in Seattle, and bought it after skimming a few pages. Cyrus does an amazing job outlining the most significant court cases that have shaped the current legal view on privacy, while simultaneously showcasing how the government and general public have failed to keep up with the ethical questions and concerns that have come up in the past few decades as technology has exploded. In general, this was a tough book to put down: it easily messed up my sleep schedule, due to a night or two of reading into the morning hours, and I walked away feeling like I had a better understanding of the privacy landscape.

Rating: 10/10

This book was frustrating, is the best way I can put it. I picked it up on sale after noticing that it was listed as a best seller... and then I regretted it for the following few weeks as I labored through it. The author has a writing style that can be explained best as "stating the obvious for 500 pages". If you have a background in technology, I would skip this; if you're wondering just what's possible with technology, it's probably an okay read, if not a bit hyperbolic.

Rating: 4/10

Much of the internet is opt-in, in a sense - there's generally a privacy policy and terms of service, but it's up to you to read the fine print and decide whether it's good or bad. This book examines how privacy can be regulated and enforced from the legal side, by requiring product makers to respect privacy in how they build. If you work in tech, touch user data, or have a passing interest in privacy, then this is worth a read.

Rating: 7.5/10

Food

Cooking is a hobby of mine. I wouldn't consider myself professional, but it's amazing for stress relief. It's also a creative outlet for when I can't stand looking at a computer anymore.

This book changed my life: it gave me the final secret to scrambled eggs that I didn't know I needed. While it's worth picking up for this fact alone, the author does a great job illustrating the link between salt, taste, and why so much of what you taste out there tastes bad. I started this on an 8 hour flight and finished it before we landed; I could not put it down.

Rating: 9/10

A strange book on this list, in that it's easy to look at it more as a cookbook than anything else. I received my copy from a dinner party I attended where the author was giving a presentation, and I guarantee you there's more to it: Turkell does a great job going into the history of vinegar, the different forms out there, and the insane number of uses it serves. It features a ton of recipes (~100), some of which I still haven't gotten to trying. Highly recommended.

Also, Michael, if you're reading this, my dog literally ate your business card. Hope you found everything you were looking for in Tokyo.

Rating: 7/10

Self

These are books I picked up on a whim, with some level of self improvement in mind.

A collection of articles and essays that help define and increase understanding of emotional intelligence. I originally picked this up to broaden my skills regarding interacting with and leading other people, and I think it helped foster a better way of looking at situations that involve other people. A little bit less about the data and numbers, and more about understanding what it takes to effectively manage and work with people. Recommended.

Rating: 7/10

This is another book where the writing style drove me slightly insane; it definitely felt like the same points being repeated for multiple paragraphs straight. With that said, the content was worth slogging through, and if you can put up with the writing, this is a fun read that'll leave you with some exercises for your brain.

Rating: 6.5/10

2019

Most of these titles were, sadly, only read in the last six months. I'm hoping to make a bigger push in 2019, with a wider range of topics to boot. If you have any recommendations for titles similar to the above, feel free to let me know!

Tue 18 December 2018

Edit as of January 8th, 2019:

Twitter announced that they were modifying their last changes, ensuring that some images remain as PNGs! You can read the full announcement here, which details when an image will remain a PNG. Thanks to this, you may not need the trick below - I'll keep it up in case anyone finds it interesting, though.

Edit as of December 26th, 2018:

Twitter announced recently that come February 11th, 2019, they'll be enforcing stricter conversion logic surrounding uploaded images. Until then, the below still works; a theoretical (and pretty good sounding) approach for post-Feb-11th is outlined here.

A rather short entry, but one that I felt was necessary - a lot of the information floating around in search engines on this is just plain wrong in 2018, and I spent longer than I wanted to figuring this out. It helped me to get my Twitter avatar a bit nicer looking, though.

Yeah, that happens. If you upload an image for your profile or banner image, Twitter automatically kicks off background jobs to take that image and produce lightweight JP(E)G variants. These tend to look... very bad. There's a trick you can use for posting photos in tweets where, if you make an image have one pixel that's ~90% transparent, Twitter won't force it off of PNG. It doesn't appear to work on profile photos at first glance, though.

Getting around the problem

Before I explain how to fix this, there's a few things to note:

- Your profile photo should be 400 by 400 pixels. Any larger will trigger resizing. Any smaller, you're just doing yourself a disservice.

- Your profile photo should be less than 700kb, as noted in the API documentation. Anything over will trigger resizing.

- Your profile photo should be a truecolor PNG (24bit), with transparency included. Theoretically you can also leave it interlaced but this didn't impact anything in my testing.
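If you'd rather check those three requirements before uploading, here's a rough sketch that uses only the Python standard library and reads the PNG header directly. The function name `check_avatar` is made up for this example, and it bakes in two assumptions: that "700kb" means 700 * 1024 bytes, and that "truecolor with transparency" means PNG color type 6 at 8 bits per channel.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 700 * 1024   # assumption: "700kb" read as 700 * 1024 bytes
TRUECOLOR_ALPHA = 6      # PNG color type for RGB plus an alpha channel
TARGET_SIZE = 400        # pixels, per the first guideline above


def check_avatar(data):
    """Return a list of guideline problems found in raw PNG bytes."""
    # A PNG starts with an 8-byte signature, then the IHDR chunk:
    # 4-byte length, b"IHDR", width, height, bit depth, color type, ...
    if len(data) < 26 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        return ["not a PNG file"]

    width, height, bit_depth, color_type = struct.unpack(">IIBB", data[16:26])

    problems = []
    if (width, height) != (TARGET_SIZE, TARGET_SIZE):
        problems.append("dimensions are %dx%d, expected 400x400" % (width, height))
    if len(data) >= MAX_BYTES:
        problems.append("file is %d bytes, expected under %d" % (len(data), MAX_BYTES))
    if color_type != TRUECOLOR_ALPHA or bit_depth != 8:
        problems.append("not an 8-bit truecolor PNG with an alpha channel")
    return problems
```

Passing it the raw bytes of your image should give back an empty list when all three guidelines are met. It only inspects the header, so it can't tell you whether the transparent area is large enough to matter.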

Now, the thing that I found is that the 1 pixel transparency trick doesn't work on profile photos, but if you crop it to be a circle with the transparency behind it, this seems to be enough. If I had to hazard a guess, I'd wager that Twitter ignores transparent pixels unless it deems they seriously matter... as in, there's a threshold you have to hit, or something.

Something like this:

Uploading Properly

For some reason, uploading your profile photo on the main site incurs notably more distorted images than if you do it via an API call. I cannot fathom why this is, but it held true for me. If you're not the programming type, old school apps like Tweetbot still use the API call, so changing the photo via Tweetbot should theoretically do it.

If you're the programming type, here's a handy little script to do this for you:

    # pip install twython to get this library
    from twython import Twython

    # Create a new App at https://dev.twitter.com/, check off "sign in via Twitter", and get your tokens there
    twitter = Twython('Consumer API Key', 'Consumer API Secret', 'Access Token', 'Access Token Secret')

    # Read and upload the image, see image guidelines in post above~
    # (the with-block closes the file once the upload call returns)
    with open('path/to/image.png', 'rb') as image:
        twitter.update_profile_image(image=image)

Keep in mind that Twitter will still make various sizes out of what you upload, so even this trick doesn't save you from their system - just helps make certain images a tiny bit more crisp. Hope it helps!

Mon 03 December 2018

If you've done any iOS development, you're likely familiar with UICollectionView, a data-driven way of building complex views that offer more flexibility than UITableView. If you've dabbled in Cocoa (macOS) development, you've probably seen NSCollectionView and NSTableView... and promptly wondered what era you stepped into.

I kid, but only somewhat. Over the past few macOS releases, Apple's been silently patching these classes to be more modern. NSTableView is actually super similar to UITableView; auto-sizing rows work fine, and you can get free row-swiping on trackpads. You'll be stuck working around a strange data source discrepancy (a flat list vs a 2-dimensional list), but it can work. NSCollectionView was mired in a strange old API for a while, but it's now mostly compatible with UICollectionView, provided you do the proper ritual to force it into the new API.

In iOS-land, there's a pretty great project by the name of SwipeCellKit which brings swipe-to-reveal functionality to UICollectionView. It's nice, as one of the more annoying things about moving from UITableView to UICollectionView has been the lack of swipeable actions. In an older project I wound up looking into how difficult it'd be to bring this same API to NSCollectionViewItem; I didn't finish it as the design for the project wound up being easier to implement with NSTableView, but I figured I'd share my work here in case anyone out there wants to extend it further. A good chunk of this has been from digging through various disconnected docs and example projects from Apple, coupled with poking and prodding through Xcode, so I'd hate for it to fade back into obscurity.

Just Give Me the Code...

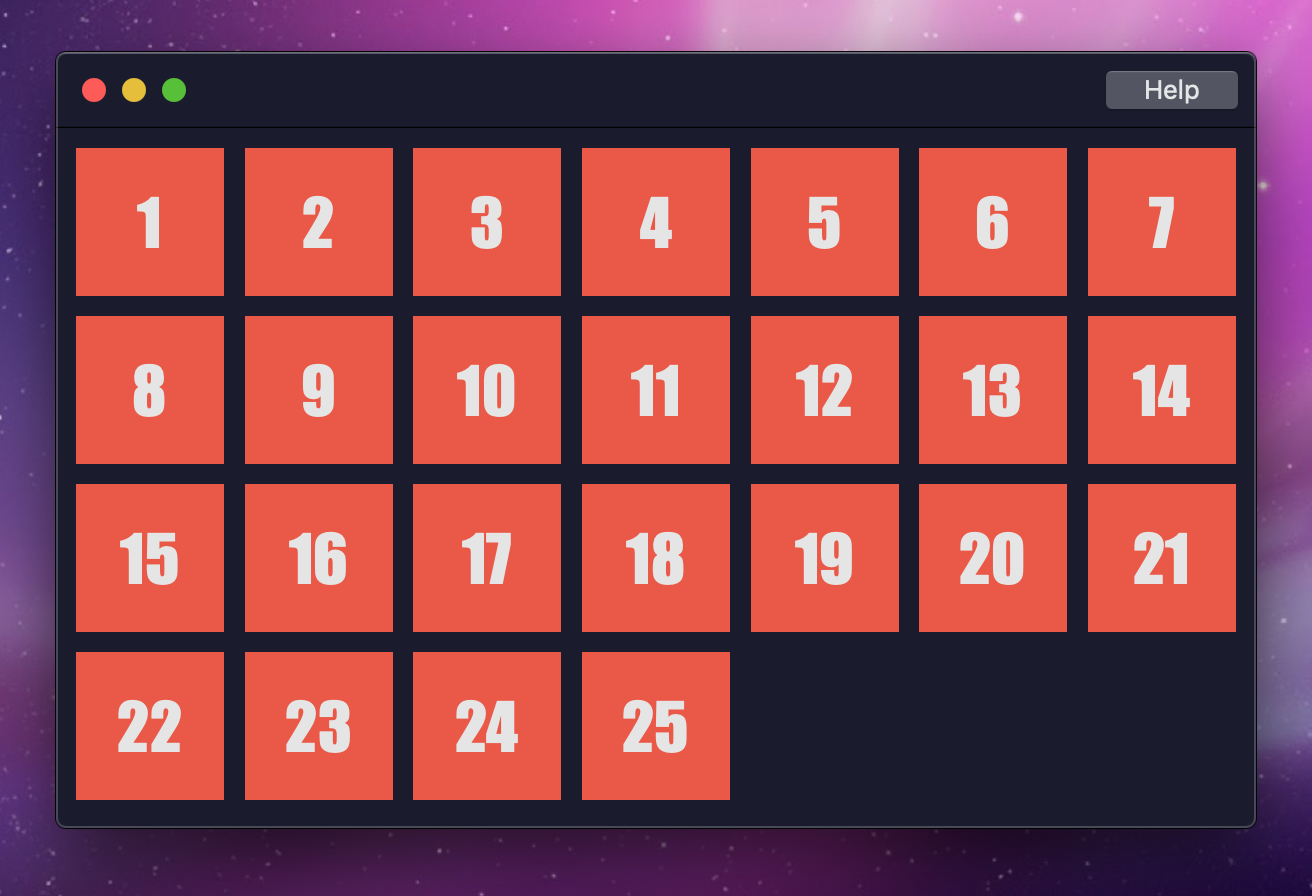

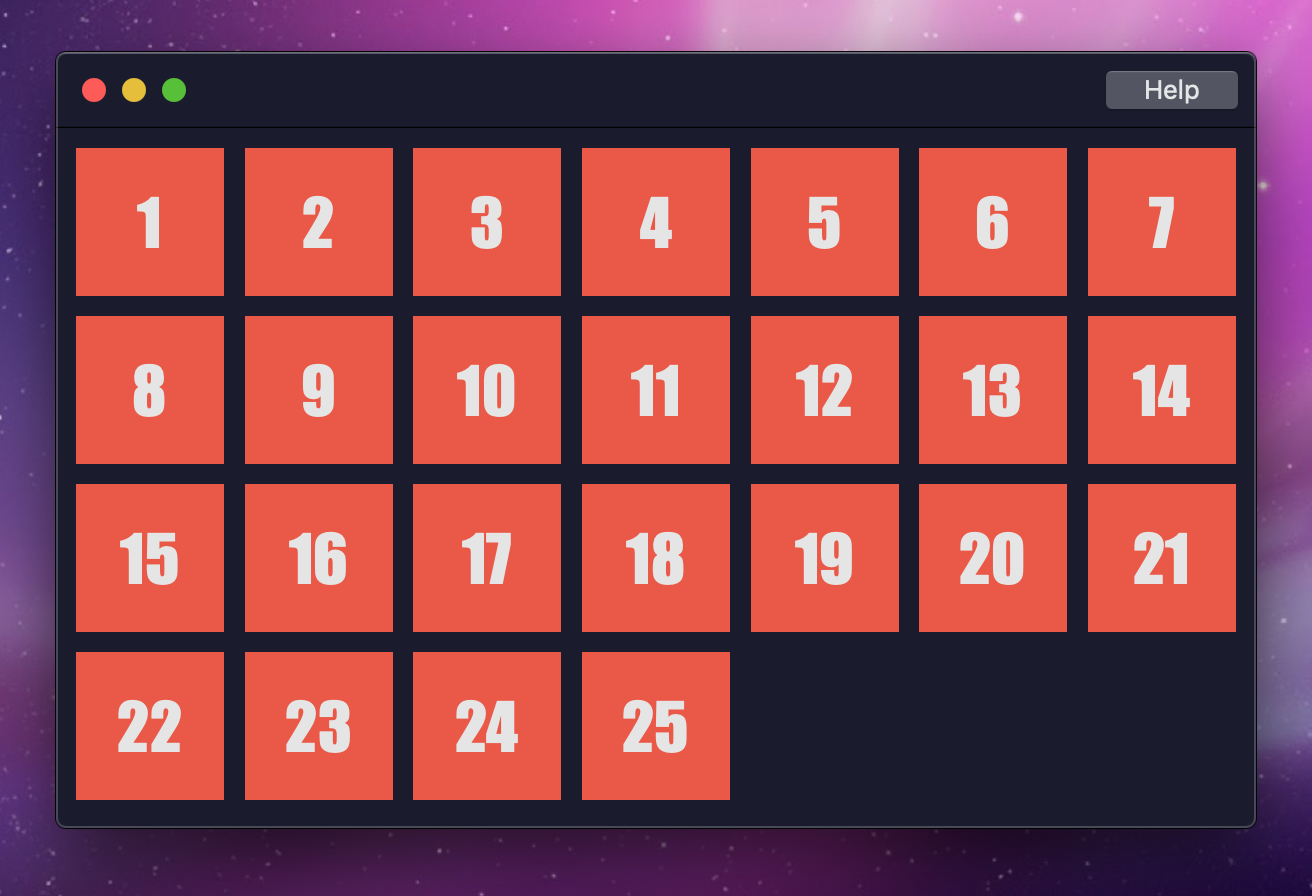

If you're the type who'd rather dig around in code, feel free to jump directly to the project on GitHub. It's a complete macOS Xcode project that you can run and mess around with, in the style of a holiday calendar. It's December, sue me.

Swiping on Mac

Getting this working for Cocoa was a bit cumbersome, as there's a few different ways you can attempt it, all with their own pitfalls.

- Like UIKit, Cocoa and AppKit have the concept of Gesture Recognizers... but they're more limited in general, as they seemingly require a full click before you can act on mouse or gesture movement. I spent a bit of time testing this, and it seems impossible to disable. This means they ultimately don't work, as a trackpad on Mac can be configured to not be click-on-tap. In addition, a few things here seem specific to the Touch Bar found in newer MacBook Pros, which don't particularly help here.

- We could try the old school mouse tracking NSEvent APIs, but they feel very cumbersome in 2018 (not that they don't have their place). Documentation also feels very spotty on them.

Ultimately, the best approach I found was going with simple touchesBegan(), touchesMoved(), and touchesEnded() methods on the view controller item. The bulk of the logic happens in touchesMoved(), with the rest existing mostly as flow-control for everything.

Opting In

Before implementing those methods, setting up an NSCollectionViewItem so that it'll report touch events requires a couple of lines of code. I don't use Interface Builder, so this is included in overriding loadView() below; if you're an Interface Builder user, you might opt for this to happen in viewDidLoad() instead.

    import Cocoa

    class HolidayCalendarCollectionViewItem: NSCollectionViewItem {
        // Constraint we animate to slide the content view left or right
        var leftAnchor: NSLayoutConstraint?

        // Touches captured when the gesture starts, and their latest positions
        var initialTouchOne: NSTouch?
        var initialTouchTwo: NSTouch?
        var currentTouchOne: NSTouch?
        var currentTouchTwo: NSTouch?

        var initialPoint: CGPoint?
        var isTracking = false

        public lazy var contentView: NSView = {
            let view = NSView(frame: .zero)
            view.translatesAutoresizingMaskIntoConstraints = false
            return view
        }()

        override func loadView() {
            let itemView = NSView(frame: .zero)
            itemView.postsFrameChangedNotifications = false
            itemView.postsBoundsChangedNotifications = false
            itemView.wantsLayer = true
            itemView.allowedTouchTypes = [.direct, .indirect]

            itemView.addSubview(contentView)

            leftAnchor = contentView.leftAnchor.constraint(equalTo: itemView.leftAnchor)

            NSLayoutConstraint.activate([
                contentView.topAnchor.constraint(equalTo: itemView.topAnchor),
                leftAnchor!,
                contentView.bottomAnchor.constraint(equalTo: itemView.bottomAnchor),
                contentView.widthAnchor.constraint(equalTo: itemView.widthAnchor),
            ])

            view = itemView
        }
    }

Of note here:

- We need to make sure that allowedTouchTypes supports direct and indirect touch types. Some of the docs allude to these being used more for the Touch Bar, but in my testing not having them resulted in the swipes not registering sometimes. Go figure.

- We add in a contentView property here; UICollectionViewCell already has this property, but NSCollectionViewItem is a View Controller and lacks it. Since we need two layers for swiping to reveal something, we'll just follow the UICollectionView API for comfort.

- postsFrameChangedNotifications and postsBoundsChangedNotifications are something I disable, as they can make resizing and animating complex NSCollectionViews choppy. I learned of this from some Cocoa developer who threw it on Twitter, where it likely fell into the ether and doesn't surface much anymore. Helped me in early 2018, so I'm not inclined to believe it's changed. Friends don't let friends post this stuff on Twitter.

- We keep a leftAnchor reference to do swipe animations later, and rather than pin the right anchor to the item right anchor, we just map the width.

Capturing the Swipe

With the above in place, touches should properly register. We're primarily interested in mimicking the two-finger swipe-to-reveal that NSTableView has, so our touchesBegan should block anything other than that.

    override func touchesBegan(with event: NSEvent) {
        if isTracking { return }

        let initialTouches = event.touches(matching: .touching, in: view)
        if initialTouches.count != 2 { return }

        isTracking = true
        initialPoint = view.convert(event.locationInWindow, from: nil)

        let touches = Array(initialTouches)
        initialTouchOne = touches[0]
        initialTouchTwo = touches[1]
        currentTouchOne = touches[0]
        currentTouchTwo = touches[1]
    }

When the first two-finger swipe begins, we grab the initial points for comparing to later movements.

    override func touchesMoved(with event: NSEvent) {
        if !isTracking { return }

        let currentTouches = event.touches(matching: .touching, in: view)
        if currentTouches.count != 2 { return }

        currentTouchOne = nil
        currentTouchTwo = nil

        currentTouches.forEach { (touch: NSTouch) in
            if touch.identity.isEqual(initialTouchOne?.identity) {
                currentTouchOne = touch
            } else {
                currentTouchTwo = touch
            }
        }

        let initialXPoint = [
            initialTouchOne?.normalizedPosition.x ?? 0.0,
            initialTouchTwo?.normalizedPosition.x ?? 0.0
        ].min() ?? 0.0

        let currentXPoint = [
            currentTouchOne?.normalizedPosition.x ?? 0.0,
            currentTouchTwo?.normalizedPosition.x ?? 0.0
        ].min() ?? 0.0

        let deviceWidth = initialTouchOne?.deviceSize.width ?? 0.0
        let oldX = (initialXPoint * deviceWidth).rounded(.up)
        let newX = (currentXPoint * deviceWidth).rounded(.up)

        var delta: CGFloat = 0.0
        if oldX > newX { // Swiping left
            delta = (oldX - newX) * -1.0
        } else if newX > oldX { // Swiping right
            delta = newX - oldX
        }

        NSAnimationContext.runAnimationGroup { [weak self] (context: NSAnimationContext) in
            context.timingFunction = CAMediaTimingFunction(name: .easeIn)
            context.duration = 0.2
            context.allowsImplicitAnimation = true
            self?.leftAnchor?.animator().constant = delta
        }
    }

As a drag occurs, this event will continually fire. We grab the newest ("current") touches, and compare where they are in relation to the initial touches. There's a bit of math involved here to get this right, as the Trackpad on Mac isn't quite like a touch screen (normalizedPosition doesn't map to a pixel coordinate). Once we've calculated everything, we can begin animating the top (content) view to reveal the contents underneath.
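The arithmetic is easier to see in isolation. Here's a minimal sketch of it in Python rather than Swift, purely to show the numbers; `swipe_delta` and its parameters are names invented for this example, standing in for the `normalizedPosition.x` and `deviceSize.width` values read in `touchesMoved()`.

```python
import math


def swipe_delta(initial_xs, current_xs, device_width):
    """Horizontal distance travelled, scaled to the trackpad's width.

    initial_xs and current_xs are the normalized (0.0 to 1.0) x positions
    of the two touches; device_width is the trackpad width reported by
    the touch device.
    """
    # Track the left-most finger of each pair, as the Swift code does.
    old_x = math.ceil(min(initial_xs) * device_width)
    new_x = math.ceil(min(current_xs) * device_width)
    # Negative means the fingers moved left, positive means right.
    return new_x - old_x


print(swipe_delta((0.5, 0.75), (0.25, 0.5), 100.0))  # -25: a swipe to the left
```

The result is what ends up as the constant on the left anchor constraint, so a negative value slides the content view left and a positive one slides it right.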

    override func touchesEnded(with event: NSEvent) {
        if self.isTracking {
            self.endTracking(leftAnchor?.constant ?? 0)
        }
    }

    override func touchesCancelled(with event: NSEvent) {
        if self.isTracking {
            self.endTracking(leftAnchor?.constant ?? 0)
        }
    }

    func endTracking(_ delta: CGFloat) {
        initialTouchOne = nil
        initialTouchTwo = nil
        currentTouchOne = nil
        currentTouchTwo = nil
        isTracking = false

        let leftThreshold: CGFloat = 50.0
        let rightThreshold: CGFloat = -50.0

        var to: CGFloat = 0.0
        if delta > leftThreshold {
            to = leftThreshold
        } else if delta < rightThreshold {
            to = rightThreshold
        }

        NSAnimationContext.runAnimationGroup { [weak self] (context: NSAnimationContext) in
            context.timingFunction = CAMediaTimingFunction(name: .easeIn)
            context.duration = 0.5
            context.allowsImplicitAnimation = true
            self?.leftAnchor?.animator().constant = to
        }
    }

The last necessary pieces are just handling when a drag event ends or is cancelled. We'll forward both of those events into endTracking, which determines the final resting state of the drag animation: if we've dragged far enough in either direction, it'll "snap" to the threshold and hang there until a new swipe gesture begins.
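Stripped of the animation, the snapping rule in endTracking boils down to a three-way choice. A small Python sketch of just that decision follows; `resting_offset` is a hypothetical name, and the default of 50 simply mirrors the thresholds used in the Swift code.

```python
def resting_offset(delta, threshold=50.0):
    """Where the content view settles once the fingers lift."""
    if delta > threshold:
        return threshold       # dragged far enough right: hold it open
    if delta < -threshold:
        return -threshold      # dragged far enough left: hold it open
    return 0.0                 # otherwise spring back to closed


for delta in (120.0, -75.0, 30.0):
    print(delta, "->", resting_offset(delta))
```

Note that the comparison is strict, so a drag that lands exactly on the threshold springs back to closed rather than snapping open.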

Taking It Further

While the above implements swiping, it's not... great yet. As I noted over on the GitHub repository, this could definitely be tied into a SwipeCellKit-esque API (or just into SwipeCellKit entirely). It also doesn't take drag velocity into account, as calculating it on macOS isn't as simple as iOS, and I ended up scrapping this before using it in a shipping product. Feel free to crib whatever code or assets as necessary! If you end up building on this or taking it further, a line of credit would be cool.

Fri 29 June 2018

Two Years and GUIs

One of the big (and kind of annoying) discussions that's been bandied about in the tech world over those two years has been whether Electron, a web-browser-masquerading-as-native-app project, is a plague on society or not. Resource-wise, it's probably got some argument there, but in terms of productivity it's hands down the king of the castle - effectively trading memory pressure and less focus on platform conventions in favor of just shipping something out the door. You can open and scan any thread on Hacker News or /r/programming and find people bemoaning this repeatedly.

I wouldn't keep those threads open, for what it's worth - there's generally little worth reading in them, beyond people on both sides completely misunderstanding one another. The tl;dr for pretty much every thread is: more traditional native developers tend not to understand web development, or they look down upon it as easier and less worthy of respect. Web developers tend to favor shipping faster to a wider audience, and being able to implement things across (just about) any platform. You'll see some native developers go on about Qt/GTK and co as ideal native approaches (they're not), or advocating tripling your development efforts and re-implementing a UI across n platforms (why would you bother to do this for most commercial projects? Do you enjoy wasting time and money?).

With that all said, I had reason to build some stuff in Rust recently, and wound up wanting to throw together a basic UI for it. Rust is a wonderful language, and I genuinely enjoy using it, but it's very clear that the community doesn't have a GUI focus. I figured I'd end up basically packaging together some web view (Electron or [insert your choice here]), but wanted to see how easily I could finagle things together at a native level. Since I haven't written anything in some time, I figured this would be a good fit for a super short post.

Going Down a GUI Rabbit Hole

I use a Mac pretty much exclusively, and am pretty familiar with Cocoa, so jumping to that from Rust seemed pretty reasonable. It's also ridiculously simple, thanks to Objective-C - the C interop in Rust makes this fairly transparent, provided you're willing to dabble in the unsafe side of things. What I came up with was a mix of rust-objc and rust-cocoa, from core-foundation-rs, along with some usage of Serde for a quickly hacked together CSS framework.

Given the following example styling...

    {
        "window": {
            "backgroundColor": {"r": 35, "g": 108, "b": 218},
            "defaultWidth": 800,
            "defaultHeight": 600
        },

        "root": {
            "backgroundColor": {"r": 35, "g": 108, "b": 218}
        },

        "sidebar": {
            "backgroundColor": {"r": 5, "g": 5, "b": 5},
            "width": 200,
            "height": 400,
            "top": "root.top",
            "left": "root.left",
            "bottom": "root.bottom"
        },

        "content": {
            "backgroundColor": {"r": 35, "g": 108, "b": 218},
            "width": 100,
            "height": 300,
            "top": "root.top",
            "left": "sidebar.right",
            "right": "root.right",
            "bottom": "root.bottom"
        }
    }

A basic app could be constructed like so:

    extern crate shinekit;
    use shinekit::*;

    fn main() {
        shinekit::run(vec![
            StyleSheet::default(include_str!("styles/default.json"))
        ], App::new("App", View::named("root").subviews(vec![
            View::named("sidebar"),
            View::named("content")
        ])));
    }

The declarative approach to the UI is inspired by React (and co). I threw the resulting package on GitHub as Shinekit, in case anyone out there finds it interesting and would want to hack on it themselves. Since it's basically hacking through Objective-C, I've got a theory that it could be wired up to Microsoft's port of Objective-C for Windows, which ultimately creates native UWP apps.
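For a sense of how the edge references in that stylesheet might be interpreted, here's a small Python sketch that splits values like "sidebar.right" into a view name and an edge. It only illustrates the format: it is not how Shinekit itself is implemented, and `parse_constraints` is a hypothetical name.

```python
import json

EDGES = ("top", "left", "right", "bottom")


def parse_constraints(stylesheet):
    """Turn entries like "left": "sidebar.right" into constraint tuples.

    Each tuple is (view, edge, target_view, target_edge).
    """
    styles = json.loads(stylesheet)
    constraints = []
    for view_name, style in styles.items():
        for edge in EDGES:
            target = style.get(edge)
            if target is None:
                continue
            target_view, target_edge = target.split(".", 1)
            if target_view not in styles:
                raise ValueError("%s.%s refers to unknown view %r"
                                 % (view_name, edge, target_view))
            constraints.append((view_name, edge, target_view, target_edge))
    return constraints


sheet = '{"root": {}, "sidebar": {"left": "root.left"}}'
print(parse_constraints(sheet))
```

Each tuple maps naturally onto a pair of layout anchors, which is roughly the shape an Auto Layout constraint wants anyway.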

Of note, some things for GUI programming are a pain in the ass in Rust - e.g., parent and child relationships. Shinekit pushes that stuff over to Cocoa/Objective-C where possible, rather than tackling it head-on. In a sense, it's cheating - but it creates a slightly nicer API, which (in my experience) is important in UI development.

What's Next?

Well, if you're a native fan, you're gonna hate to hear that yes, I did wind up just throwing a web browser into the mix to ship. The goal wasn't to build a full GUI framework.

With that said... time permitting I'll probably hack on this some more, as the existing GUI options in Rust don't really fit my idea of what a GUI framework should look and function like. As Rust becomes more popular, a decent GUI approach (even for small toolkit apps and the like) would be great to have. If or when this becomes more mature, I'd throw it up on crates.io as something to be used more widely.